Michael Naimark

Sunday, 15 November 2009

Work from Viewfinder: How to Seamlessly “Flickrize” Google Earth.

“The tension between computing technology that augments human activity and technology that automates it goes all the way back to the 1960s.

It can be seen in Viewfinder, a demonstration of a photo-sharing or photo-placing system developed by a group of researchers and digital artists at the University of Southern California. The system, which was created with the help of a research grant from Google, is an intriguing alternative to Photosynth, a project developed in 2006 by Microsoft Live Labs and the University of Washington that automated the proper placement of two-dimensional digital photographs in a three-dimensional virtual space.

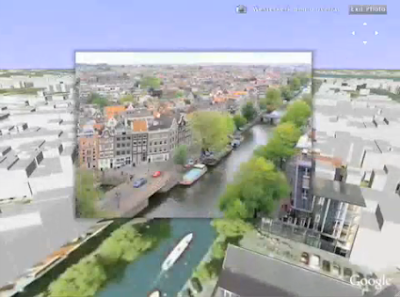

Demonstration video showing the possibilities of Viewfinder. (Viewfinder Project)

In contrast, Viewfinder, which is not yet a commercial service, is intended to make it simpler for users to manually “pose” photos in services like Google Earth — to place them in the proper location and at the original angle at which they were taken. It is already possible to insert photos into Google Earth, but the researchers said their goal was to make the process an order of magnitude simpler.

“We specify that a 10-year-old should be able to find the pose of a photo in less than a minute, and we are convinced that this goal is achievable,” the researchers noted in a progress report Thursday.

Google, Microsoft and Yahoo have all created 3-D world maps that allow users to virtually “fly” over the surface of the earth and view satellite and aerial imagery. The services are being expanded to include 3-D topography as well as 3-D buildings for some locations. The Viewfinder idea would essentially merge a photo-sharing service with a mapping service, making it possible for users to see what a particular point on earth looked like at a particular time.

The team of Viewfinder designers is a collaboration between the Interactive Media Division at U.S.C. and the Institute for Creative Technologies.

The project is made simpler by the widespread availability of geotagged digital photos, those that have been tagged with geographical coordinates indicating where they were taken. However, the researchers say that today such photos are generally treated as hovering “playing cards” in 3-D models, giving the world a flat 2-D sensibility.

The goal, the researchers wrote, is an experience that is both as compelling as Google Earth and as accessible as the photo-sharing service Flickr. The result is photos that appear perfectly aligned — and conceivably even transparent — to the underlying 3-D world.

“This is an attempt to viscerally and emotionally plant your picture in a virtual world,” said Michael Naimark, a research associate professor at the Interactive Media Division and the director of the Viewfinder project.

He said the group had patented its work, but had not attempted to commercialize it.

“We’re rabble-rousing,” he said. “This is as much an artist’s intervention as a technological invention.”

While photos are placed manually in Viewfinder, Microsoft’s Photosynth performs the same task automatically, using image recognition and related artificial intelligence techniques to analyze photos and create a 3-D “point cloud.” The user can then visually hop from one image to the next.

“We’re totally impressed by Photosynth,” Mr. Naimark said, but he added that people-centric techniques like “crowd-sourcing” are now giving traditional artificial intelligence a run for its money.

He envisions a world in which cameras not only have geotagging capabilities but also directional sensors that will make Viewfinder a more powerful way to enrich current 3-D world models.” – John Markoff for the New York Times